STARTUPS

The mission of our ETH spin-off LatticeFlow is to enable organizations to deliver safe, robust, and reliable AI systems. To this end, LatticeFlow collaborates with leading enterprises such as Swiss Federal Railways (SBB) and Siemens, and with government agencies such as the US Army and Germany's Federal Office for Information Security (BSI).

You can read more about our vision and product on TechCrunch and ETH News.

Publications

Shared Certificates for Neural Network Verification,

CAV 2022

Marc Fischer*, Christian Sprecher*, Dimitar I. Dimitrov, Gagandeep Singh, Martin Vechev

Data Leakage in Federated Averaging,

arXiv 2022

Dimitar I. Dimitrov, Mislav Balunović, Nikola Konstantinov, Martin Vechev

(De-)Randomized Smoothing for Decision Stump Ensembles,

arXiv 2022

Miklós Z. Horváth*, Mark Niklas Müller*, Marc Fischer, Martin Vechev

Robust and Accurate - Compositional Architectures for Randomized Smoothing,

SRML@ICLR 2022

Miklós Z. Horváth, Mark Niklas Müller, Marc Fischer, Martin Vechev

Boosting Randomized Smoothing with Variance Reduced Classifiers,

ICLR (Spotlight) 2022

Miklós Z. Horváth, Mark Niklas Müller, Marc Fischer, Martin Vechev

Complete Verification via Multi-Neuron Relaxation Guided Branch-and-Bound,

ICLR 2022

Claudio Ferrari, Mark Niklas Müller, Nikola Jovanović, Martin Vechev

Provably Robust Adversarial Examples,

ICLR 2022

Dimitar I. Dimitrov, Gagandeep Singh, Timon Gehr, Martin Vechev

Fair Normalizing Flows,

ICLR 2022

Mislav Balunović, Anian Ruoss, Martin Vechev

Bayesian Framework for Gradient Leakage,

ICLR 2022

Mislav Balunović, Dimitar I. Dimitrov, Robin Staab, Martin Vechev

LAMP: Extracting Text from Gradients with Language Model Priors,

arXiv 2022

Dimitar I. Dimitrov*, Mislav Balunović*, Nikola Jovanović, Martin Vechev

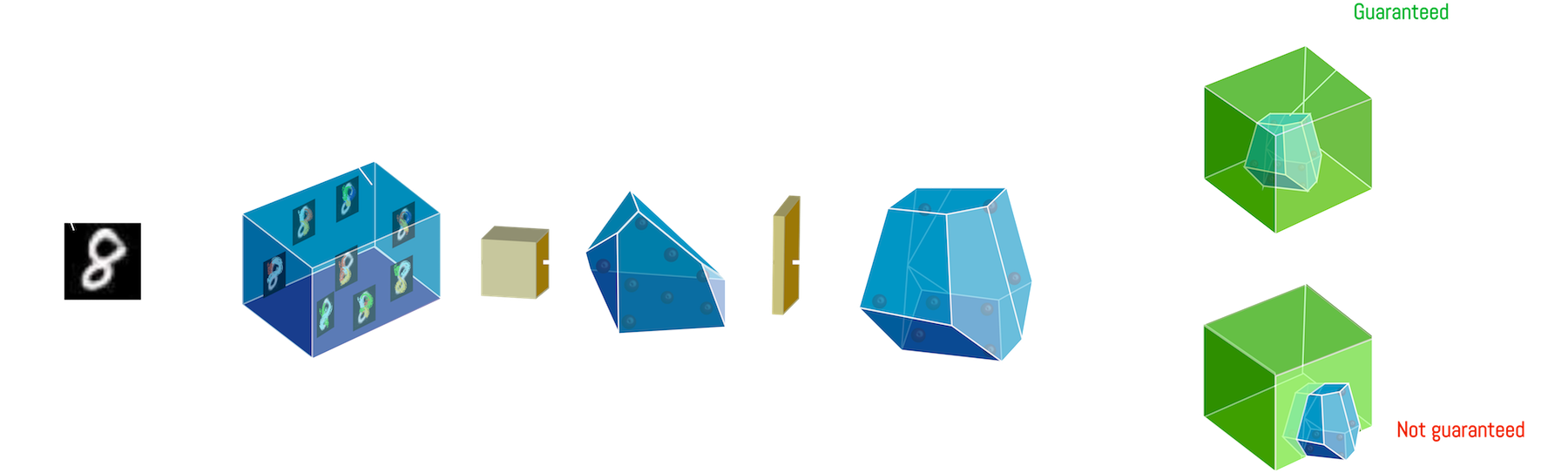

PRIMA: General and Precise Neural Network Certification via Scalable Convex Hull Approximations,

POPL 2022

Mark Niklas Müller, Gleb Makarchuk, Gagandeep Singh, Markus Püschel, Martin Vechev

The Fundamental Limits of Interval Arithmetic for Neural Networks,

arXiv 2021

Matthew Mirman, Maximilian Baader, Martin Vechev

Automated Discovery of Adaptive Attacks on Adversarial Defenses,

NeurIPS 2021

Chengyuan Yao, Pavol Bielik, Petar Tsankov, Martin Vechev

Bayesian Framework for Gradient Leakage,

NeurIPS Workshop on New Frontiers in Federated Learning 2021

Mislav Balunović, Dimitar I. Dimitrov, Robin Staab, Martin Vechev

Robustness Certification for Point Cloud Models,

ICCV 2021

Tobias Lorenz, Anian Ruoss, Mislav Balunović, Gagandeep Singh, Martin Vechev

Effective Certification of Monotone Deep Equilibrium Models,

arXiv 2021

Mark Niklas Müller, Robin Staab, Marc Fischer, Martin Vechev

Scalable Polyhedral Verification of Recurrent Neural Networks,

CAV 2021

Wonryong Ryou, Jiayu Chen, Mislav Balunović, Gagandeep Singh, Andrei Dan, Martin Vechev

Automated Discovery of Adaptive Attacks on Adversarial Defenses,

AutoML@ICML (Oral) 2021

Chengyuan Yao, Pavol Bielik, Petar Tsankov, Martin Vechev

Scalable Certified Segmentation via Randomized Smoothing,

ICML 2021

Marc Fischer, Maximilian Baader, Martin Vechev

Fair Normalizing Flows,

arXiv 2021

Mislav Balunović, Anian Ruoss, Martin Vechev

Fast and Precise Certification of Transformers,

PLDI 2021

Gregory Bonaert, Dimitar I. Dimitrov, Maximilian Baader, Martin Vechev

Certified Defenses: Why Tighter Relaxations May Hurt Training,

arXiv 2021

Nikola Jovanović*, Mislav Balunović*, Maximilian Baader, Martin Vechev

Boosting Randomized Smoothing with Variance Reduced Classifiers,

arXiv 2021

Miklós Z. Horváth, Mark Niklas Müller, Marc Fischer, Martin Vechev

Certify or Predict: Boosting Certified Robustness with Compositional Architectures,

ICLR 2021

Mark Niklas Müller, Mislav Balunović, Martin Vechev

Robustness Certification with Generative Models,

PLDI 2021

Matthew Mirman, Alexander Hägele, Timon Gehr, Pavol Bielik, Martin Vechev

Scaling Polyhedral Neural Network Verification on GPUs,

MLSys 2021

Christoph Müller*, François Serre*, Gagandeep Singh, Markus Püschel, Martin Vechev

Efficient Certification of Spatial Robustness,

AAAI 2021

Anian Ruoss, Maximilian Baader, Mislav Balunović, Martin Vechev

Learning Certified Individually Fair Representations,

NeurIPS 2020

Anian Ruoss, Mislav Balunović, Marc Fischer, Martin Vechev

Certified Defense to Image Transformations via Randomized Smoothing,

NeurIPS 2020

Marc Fischer, Maximilian Baader, Martin Vechev

Adversarial Attacks on Probabilistic Autoregressive Forecasting Models,

ICML 2020

Raphaël Dang-Nhu, Gagandeep Singh, Pavol Bielik, Martin Vechev

Adversarial Robustness for Code,

ICML 2020

Pavol Bielik, Martin Vechev

Adversarial Training and Provable Defenses: Bridging the Gap,

ICLR (Oral) 2020

Mislav Balunović, Martin Vechev

Scalable Inference of Symbolic Adversarial Examples,

arXiv 2020

Dimitar I. Dimitrov, Gagandeep Singh, Martin Vechev

Universal Approximation with Certified Networks,

ICLR 2020

Maximilian Baader, Matthew Mirman, Martin Vechev

Robustness Certification of Generative Models,

arXiv 2020

Matthew Mirman, Timon Gehr, Martin Vechev

Beyond the Single Neuron Convex Barrier for Neural Network Certification,

NeurIPS 2019

Gagandeep Singh, Rupanshu Ganvir, Markus Püschel, Martin Vechev

Certifying Geometric Robustness of Neural Networks,

NeurIPS 2019

Mislav Balunović, Maximilian Baader, Gagandeep Singh, Timon Gehr, Martin Vechev

Online Robustness Training for Deep Reinforcement Learning,

arXiv 2019

Marc Fischer, Matthew Mirman, Steven Stalder, Martin Vechev

DL2: Training and Querying Neural Networks with Logic,

ICML 2019

Marc Fischer, Mislav Balunović, Dana Drachsler-Cohen, Timon Gehr, Ce Zhang, Martin Vechev

Boosting Robustness Certification of Neural Networks,

ICLR 2019

Gagandeep Singh, Timon Gehr, Markus Püschel, Martin Vechev

An Abstract Domain for Certifying Neural Networks,

POPL 2019

Gagandeep Singh, Timon Gehr, Markus Püschel, Martin Vechev

Fast and Effective Robustness Certification,

NeurIPS 2018

Gagandeep Singh, Timon Gehr, Matthew Mirman, Markus Püschel, Martin Vechev

Differentiable Abstract Interpretation for Provably Robust Neural Networks,

ICML 2018

Matthew Mirman, Timon Gehr, Martin Vechev

AI2: Safety and Robustness Certification of Neural Networks with Abstract Interpretation,

IEEE S&P 2018

Timon Gehr, Matthew Mirman, Dana Drachsler-Cohen, Petar Tsankov, Swarat Chaudhuri, Martin Vechev